Introduction

This post covers the basic procedure for setting up simple API security for Mule applications. I assume the reader has no prior knowledge of applying API security on the Mule Anypoint Platform. Here are the key takeaways:

- Write a simple RAML specification in Design Center on Anypoint Platform

- Publish the API (RAML) to Exchange

- Use API Manager to apply simple security

- Explain in detail how it works

The complete source code is available on my GitHub: https://github.com/garyliu1119/api-manager-explained

Design & Publish API

In the new version of Anypoint Platform, API management is split across three separate areas:

- Design Center

- Exchange

- API Manager

There are many editors we can use to design our API (RAML). I find Design Center and Atom to be the two most powerful tools, and both are easy to use. For this demo, I use Anypoint Platform's Design Center. First, create a new project as shown in the snapshot below:

Choose "API Specification" and enter a project name; then you can write your API in RAML. RAML itself is documented in detail in many places. The code below is the simplest RAML file I could write to demonstrate API security.

```yaml
#%RAML 1.0
title: Basic Auth API
version: v1
protocols: [ HTTP ]
baseUri: https://mocksvc.mulesoft.com/mocks/09212943-e570-413d-92f6-ef5e634f33cb/{version}
# baseUri: http://esb.ggl-consulting.com/{version}
mediaType: application/json
securitySchemes:
  basicAuth:
    description: First simple auth
    type: Basic Authentication
    describedBy:
      headers:
        Authorization:
          description: Base64-encoded "username:password"
          type: string
      responses:
        401:
          description: |
            Unauthorized: username or password or the combination is invalid
# Declares that all resources use basicAuth; actual enforcement comes from the API Manager policy
securedBy: [ basicAuth ]
types:
  Account:
    properties:
      id: integer
      type: string
      name: string
  Error:
    properties:
      code: integer
      errorMessage: string
/accounts:
  /{id}:
    get:
      description: get an account information by id
      responses:
        200:
          body:
            application/json:
              type: Account
              example: { "id": 1234, "name": "Gary Liu", "type": "checking" }
```
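The example response has to conform to the Account type declared in the RAML. To make that concrete, here is a minimal, hypothetical Python sketch (not part of RAML tooling or Anypoint Platform) that checks a JSON payload against a flat type declaration. It assumes only the two scalar types used above (integer and string) and follows the RAML 1.0 rule that properties are required by default.

```python
# Hypothetical sketch: check a JSON payload against a flat RAML-style type
# declaration. Only the scalar types used in this RAML are supported.
import json

# Mirrors the "Account" type declared in the RAML above.
ACCOUNT_TYPE = {"id": "integer", "type": "string", "name": "string"}

# RAML scalar type name -> Python predicate
_CHECKS = {
    # bool is a subclass of int in Python, so exclude it explicitly
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "string": lambda v: isinstance(v, str),
}


def validate(payload: dict, declared: dict) -> list:
    """Return a list of problems; an empty list means the payload conforms."""
    problems = []
    for prop, raml_type in declared.items():
        if prop not in payload:
            # RAML 1.0 properties are required unless marked optional
            problems.append(f"missing property '{prop}'")
        elif not _CHECKS[raml_type](payload[prop]):
            problems.append(f"'{prop}' should be {raml_type}")
    return problems


if __name__ == "__main__":
    example = json.loads('{ "id": 1234, "name": "Gary Liu", "type": "checking" }')
    print(validate(example, ACCOUNT_TYPE))  # prints []
    print(validate({"id": "1234", "name": "Gary Liu"}, ACCOUNT_TYPE))
```

Real RAML processors also handle optional properties, nested types and additional properties; the sketch deliberately ignores all of that.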

Once the API is complete, we need to publish it to Exchange. To do so, refer to the snapshot below:

Now, we can view our API in the Exchange as shown in the following snapshot:

Once the API is published to the Exchange, we can go to API Manager to import the API as shown in the following snapshot:

We can view the API as shown in the following snapshots:

When viewing the API, note a few pieces of information needed for auto-discovery. For Mule 3, we need the "API Name" and "API Version"; for Mule 4, we need the "API ID".

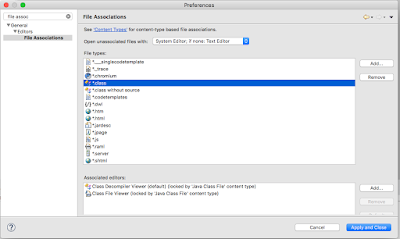

Set Up Anypoint Studio

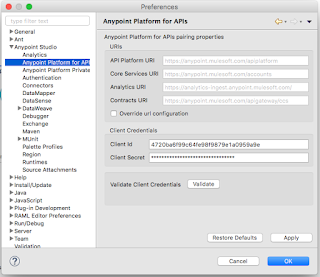

To apply the security policy to applications running locally, we need to connect our local runtime to Anypoint Platform. To do so, we configure Anypoint Studio with the client ID and client secret of our environment. First, get the client ID and client secret: Access Management --> Environment (left panel) --> Environment (Sandbox):

Now, in Anypoint Studio, go to Preferences --> Anypoint Platform For APIs --> fill in the client ID and client secret --> Validate:

Once the client ID and client secret validate successfully, Anypoint Studio and its embedded runtime can communicate with Anypoint Platform.

Apply Security Policy In API Manager

Once the API is imported into API Manager, we can apply security policies, SLA tiers, alerts, etc. The main purpose of this post is to demonstrate how to apply security policies; I will cover the other areas in later posts. Here, I apply simple security and HTTP Basic Authentication, as shown in the following snapshot:

When you apply simple security, the platform asks for a user name and password. Note these credentials down; we will need them when we send the HTTP request.
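Conceptually, these two policies boil down to one decision: decode the Authorization header, compare the credentials with the user name and password configured in API Manager, and reject the request with 401 if they do not match. The Python sketch below models only that decision. It is not how Mule implements the policy (the generated policy file discussed later in this post shows Mule uses Spring Security), and the function name and stored credentials are hypothetical.

```python
import base64
import binascii
import hmac

# Hypothetical credentials, standing in for those entered in API Manager.
CONFIGURED_USER = "Gary1234"
CONFIGURED_PASSWORD = "Gary1234$"


def check_basic_auth(authorization_header):
    """Return the HTTP status a Basic-auth gate would produce: 200 or 401."""
    if not authorization_header:
        return 401
    scheme, _, token = authorization_header.partition(" ")
    # The auth scheme name is case-insensitive in HTTP
    if scheme.lower() != "basic" or not token:
        return 401
    try:
        decoded = base64.b64decode(token, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return 401
    # Split on the first ':' only; the password itself may contain ':'
    user, sep, password = decoded.partition(":")
    if not sep:
        return 401
    # Constant-time comparison on bytes avoids leaking information via timing
    user_ok = hmac.compare_digest(user.encode("utf-8"), CONFIGURED_USER.encode("utf-8"))
    password_ok = hmac.compare_digest(password.encode("utf-8"), CONFIGURED_PASSWORD.encode("utf-8"))
    return 200 if user_ok and password_ok else 401
```

For example, `check_basic_auth("Basic R2FyeTEyMzQ6R2FyeTEyMzQk")` returns 200, while a token that also encodes a trailing newline is rejected with 401.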

At this point, we have set up security for the API on the administrative side. Next, we need to apply the security policy (user name and password) to our application, which I cover in the next section.

Apply Security Policy To Mule Application Using Auto Discovery

The key to controlling API access is auto-discovery, i.e. the communication between API Manager and the application. To enable auto-discovery of the application, and so let API Manager control access to it, we need to create an auto-discovery component, as shown in the following snapshots:

The apiName and version values come from API Manager, as shown in the following snapshots:

The apikitRef refers to our APIkit router definition, as shown in the following snapshots:

As we can see, the security policy applies to any API call passing through the APIkit router, which refers to our API definition, api-manager-explained.raml.
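Auto-discovery is what puts the API Manager policies in front of the APIkit router, so every request is checked before it is routed. Purely as an illustration of that ordering (Mule does not work this way internally, and every name here is hypothetical), the idea can be modelled in Python as a chain of policy functions wrapped around a router function:

```python
from typing import Callable, Dict, List, Optional, Tuple

# A request is reduced to (path, headers); a response to (status, body).
Request = Tuple[str, Dict[str, str]]
Response = Tuple[int, str]
Handler = Callable[[Request], Response]
# A policy either short-circuits with a Response or returns None to pass the request on.
Policy = Callable[[Request], Optional[Response]]


def router(request: Request) -> Response:
    """Hypothetical stand-in for the APIkit router: dispatch on the resource path."""
    path, _ = request
    if path.startswith("/api/accounts/"):
        account_id = path.rsplit("/", 1)[-1]
        return 200, f"account {account_id}"
    return 404, "Not Found"


def apply_policies(policies: List[Policy], handler: Handler) -> Handler:
    """Wrap the handler so that every policy runs, in order, before it."""
    def wrapped(request: Request) -> Response:
        for policy in policies:
            rejection = policy(request)
            if rejection is not None:
                return rejection  # the router is never reached
        return handler(request)
    return wrapped


def require_authorization_header(request: Request) -> Optional[Response]:
    """Hypothetical policy: reject any request without an Authorization header."""
    _, headers = request
    if "Authorization" not in headers:
        return 401, "Unauthorized"
    return None
```

With `gateway = apply_policies([require_authorization_header], router)`, a call such as `gateway(("/api/accounts/1234", {}))` is rejected with 401 before the router sees it. A real credential check would replace the hypothetical header-presence policy.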

Run The Application

To test the security policy applied to our application, we can use Postman, as shown in the following snapshots:

The Authorization type is "Basic Auth", with the user name and password filled in. Postman automatically generates the Base64-encoded token and sends it to the server in the Authorization header; in Postman's own header representation this appears as [{"key":"Authorization","type":"text","name":"Authorization","value":"Basic R2FyeTEyMzQ6R2FyeTEyMzQk"...]
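The header value Postman generates can be reproduced in a few lines: per RFC 7617, it is "Basic " followed by the Base64 encoding of "username:password". This minimal sketch assumes UTF-8 for the credentials and uses the credentials from this post; the function name is hypothetical.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build a Basic Authorization header value from a user name and password."""
    # Base64-encode "user:password" exactly, with no trailing newline
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    print(basic_auth_header("Gary1234", "Gary1234$"))
    # prints: Basic R2FyeTEyMzQ6R2FyeTEyMzQk
```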

We can do the same with curl. First, generate the Basic token as follows:

```shell
# printf, unlike plain echo, does not append a trailing newline;
# with echo, base64 would encode the newline too (the extra "Cg==")
printf '%s' 'Gary1234:Gary1234$' | base64
# output: R2FyeTEyMzQ6R2FyeTEyMzQk
```

Then send the request as follows:

```shell
curl -X GET -H "Authorization: Basic R2FyeTEyMzQ6R2FyeTEyMzQk" http://localhost:18081/api/accounts/1234
```
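For scripted tests, the same request can be sent from Python's standard library. This is a sketch under the assumption that the Mule application from this post is listening on localhost:18081; the helper names are hypothetical.

```python
import base64
import urllib.error
import urllib.request

# Assumes the Mule application is running locally on port 18081.
URL = "http://localhost:18081/api/accounts/1234"


def build_request(url: str, username: str, password: str) -> urllib.request.Request:
    """Build the same GET request as the curl command, with a Basic Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, method="GET", headers={"Authorization": f"Basic {token}"})


def fetch(request: urllib.request.Request):
    """Send the request and return (status, body); a 401 is returned rather than raised."""
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        return err.code, err.read().decode("utf-8", errors="replace")
```

With the application running, `fetch(build_request(URL, "Gary1234", "Gary1234$"))` should return status 200, and any other credentials should yield 401 from the policy.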

That's it. Even though it may seem complicated, this is actually the simplest security mechanism.

What Is Under The Hood?

At this point, we may ask ourselves: how does it work? How does Anypoint Platform enforce the security policies?

First, notice that when we run the application locally, the console shows the following lines:

The highlighted line shows that the policy has been applied successfully.

Meanwhile, a file is written to workspace/.mule/http-basic-authentication-282686.xml, as shown in the following snapshot:

The contents of the file are as follows:

As you can see, Mule uses Spring Security under the hood. We could in fact configure exactly the same thing directly in our Mule configuration, although I do not recommend doing so.

Another interesting point is the network connections. Here is what I see in my local environment using lsof:

```text
AnypointS 987 gl17 98u IPv6 0x3c5bcba541fe6469 0t0 TCP localhost:50687->localhost:6666 (ESTABLISHED)
AnypointS 987 gl17 223u IPv6 0x3c5bcba541fe9269 0t0 TCP localhost:50681->localhost:50683 (ESTABLISHED)
java 1107 gl17 4u IPv4 0x3c5bcba53fdc68b1 0t0 TCP localhost:50683->localhost:50681 (ESTABLISHED)
java 1107 gl17 498u IPv4 0x3c5bcba5417148b1 0t0 TCP localhost:6666->localhost:50687 (ESTABLISHED)
java 1107 gl17 526u IPv4 0x3c5bcba544131211 0t0 TCP garyliu17smbp.frontierlocal.net:50927->ec2-34-231-107-145.compute-1.amazonaws.com:https (ESTABLISHED)
```

The last line shows the TCP connection between Anypoint Platform and my local runtime.
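Most of these lines are loopback connections between Anypoint Studio and its embedded runtime, so the interesting one is easy to miss on a busy machine. A small, hypothetical Python filter can pick it out by keeping only established connections whose remote end is not a loopback host. It assumes lsof's default column layout, as in the listing above, and can be fed the output of `lsof -i TCP`.

```python
from typing import List, Tuple

# Remote hosts treated as local (e.g. Studio <-> embedded runtime traffic).
LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "[::1]"}


def external_connections(lsof_output: str) -> List[Tuple[str, str, str]]:
    """Return (command, local, remote) for ESTABLISHED TCP connections to non-loopback hosts.

    Assumes the NAME column is "local->remote" followed by the state in parentheses.
    """
    results = []
    for line in lsof_output.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[-1] != "(ESTABLISHED)" or "->" not in fields[-2]:
            continue
        local, remote = fields[-2].split("->", 1)
        # Strip the port (or service name such as "https") from the remote end
        remote_host = remote.rsplit(":", 1)[0]
        if remote_host not in LOOPBACK_HOSTS:
            results.append((fields[0], local, remote))
    return results
```

Applied to the listing above, it returns only the `java` process's connection to the `amazonaws.com` host on the https port.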

Summary

In this post, I have shown in detail how to set up and apply API security for Mule applications, and covered the underlying communication between the local runtime and Anypoint Platform. In the next post, I will cover client ID enforcement, another simple security mechanism. By the end of this series, readers should be able to master API security on the Mule platform.